The following are extracts from the paper I am writing. For implementation details, please see the tutorials page.

One of the difficulties in both robotics and nature is walking. The internet has countless videos of young children falling over.

Learning to walk is a form of optimization. Some animals learn to walk more quickly than others, which we will explore further in this paper.

In robotics, it would take too long to hand-program a response to every terrain type and every possible sensor reading.

This is why self-training and optimization are key to robots that can be deployed across a range of tasks.

Genetic algorithms apply random mutations to a genotype, which is then assessed using a fitness function.

In this case, the fittest genotype is the one that walks the best.

The shorter the distance between the robot and the wall in front, the better the fitness score will be.
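Since the range finder is the only sensor available for measuring fitness, the score can be written as the robot's advancement towards the wall, which is also how the results report it. A minimal sketch, assuming one reading is taken before and one after each walking sequence; the function name `fitness` and the readings in the example are hypothetical.

```python
def fitness(start_mm, end_mm):
    """Advancement towards the wall, in millimetres.

    start_mm: range-finder reading before the walking sequence runs
    end_mm:   range-finder reading after it finishes
    A shorter final distance to the wall gives a larger (better) score;
    a robot that ends up further away gets a negative score.
    """
    return start_mm - end_mm


# Hypothetical readings: 250 mm from the wall before walking, 238 mm after.
print(fitness(250, 238))  # 12
```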

The angle at which the robot stands will affect its score.
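One way to see why the angle matters: assuming the range finder points straight ahead and the wall is flat, turning the robot by a yaw angle θ lengthens the beam's path to the wall by a factor of 1/cos θ. This is a geometric illustration under those assumptions, not a correction applied in the experiment; `measured_distance` is a hypothetical helper.

```python
import math


def measured_distance(perpendicular_mm, yaw_deg):
    """Distance a forward-facing range finder reports to a flat wall.

    perpendicular_mm: true perpendicular distance from the sensor to the wall
    yaw_deg: how far the robot is turned away from facing the wall squarely
    The beam travels along a slanted path, so it is longer by 1 / cos(yaw).
    """
    return perpendicular_mm / math.cos(math.radians(yaw_deg))


# A robot 200 mm from the wall that has turned 20 degrees reads about 212.8 mm,
# so turning alone costs it roughly 13 mm of apparent advancement.
print(round(measured_distance(200, 20), 1))  # 212.8
```

An error of this size is comparable to the 20 to 28 mm advancements reported in the results, so small changes in heading can noticeably shift a genotype's score.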

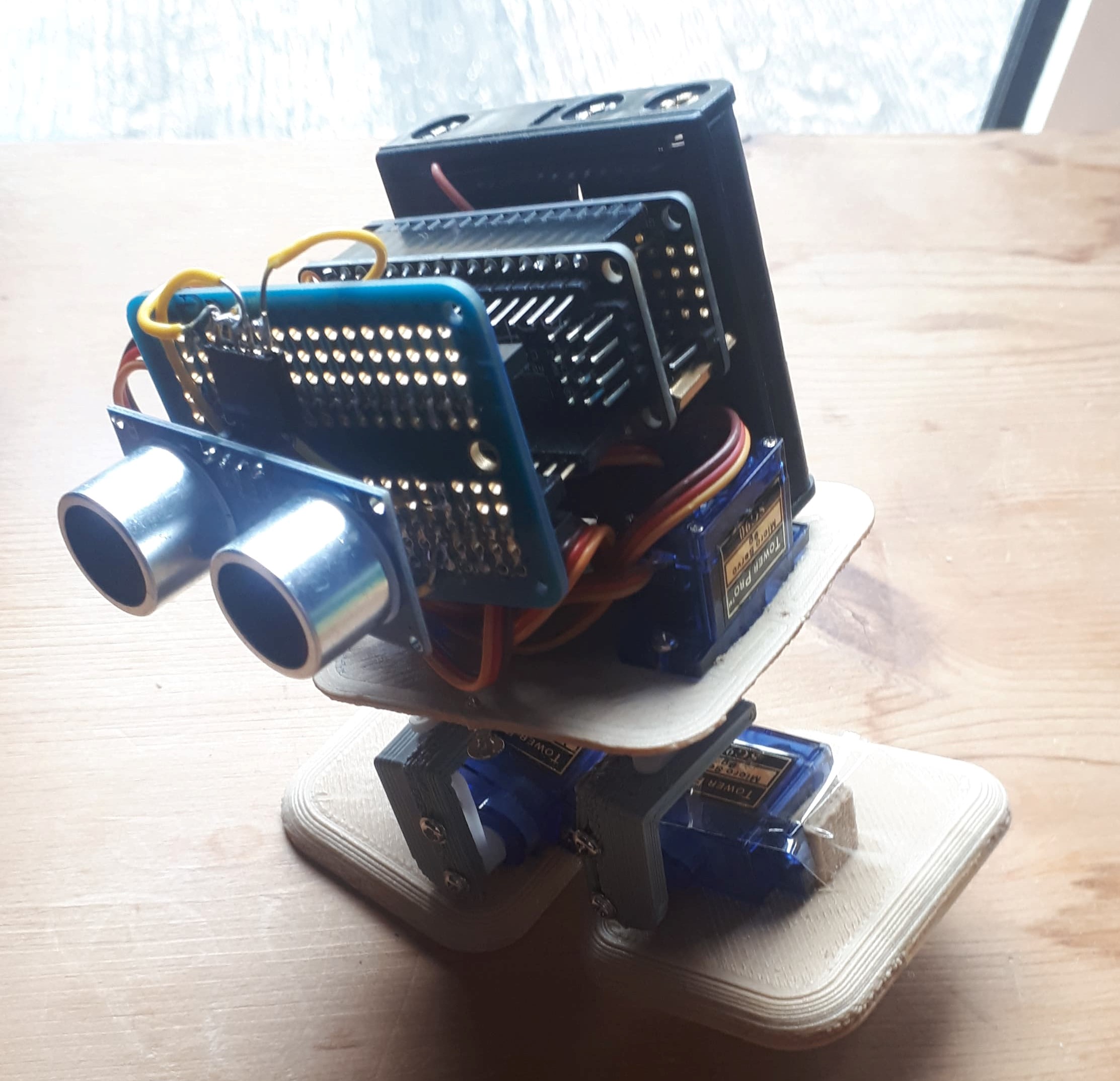

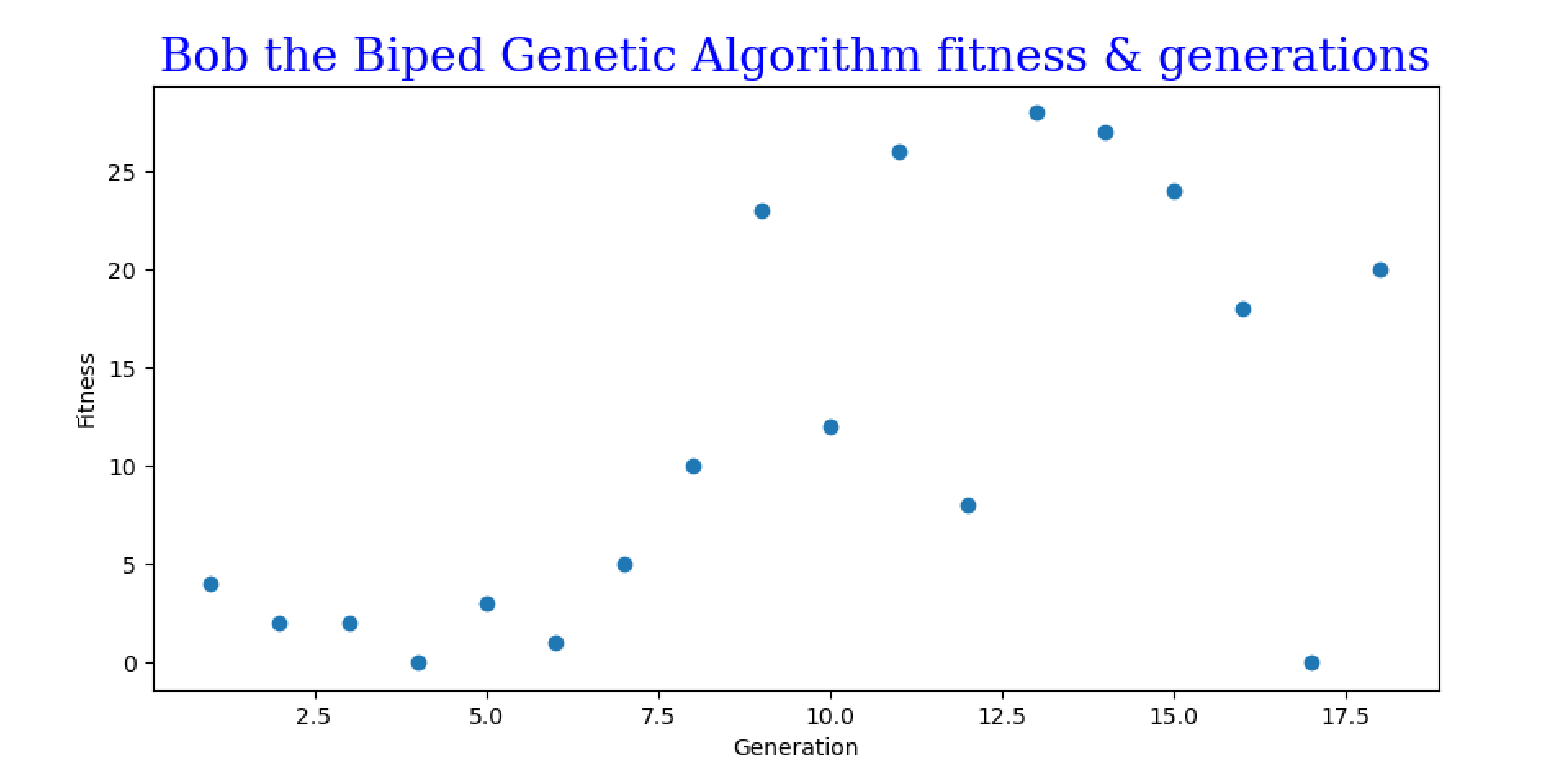

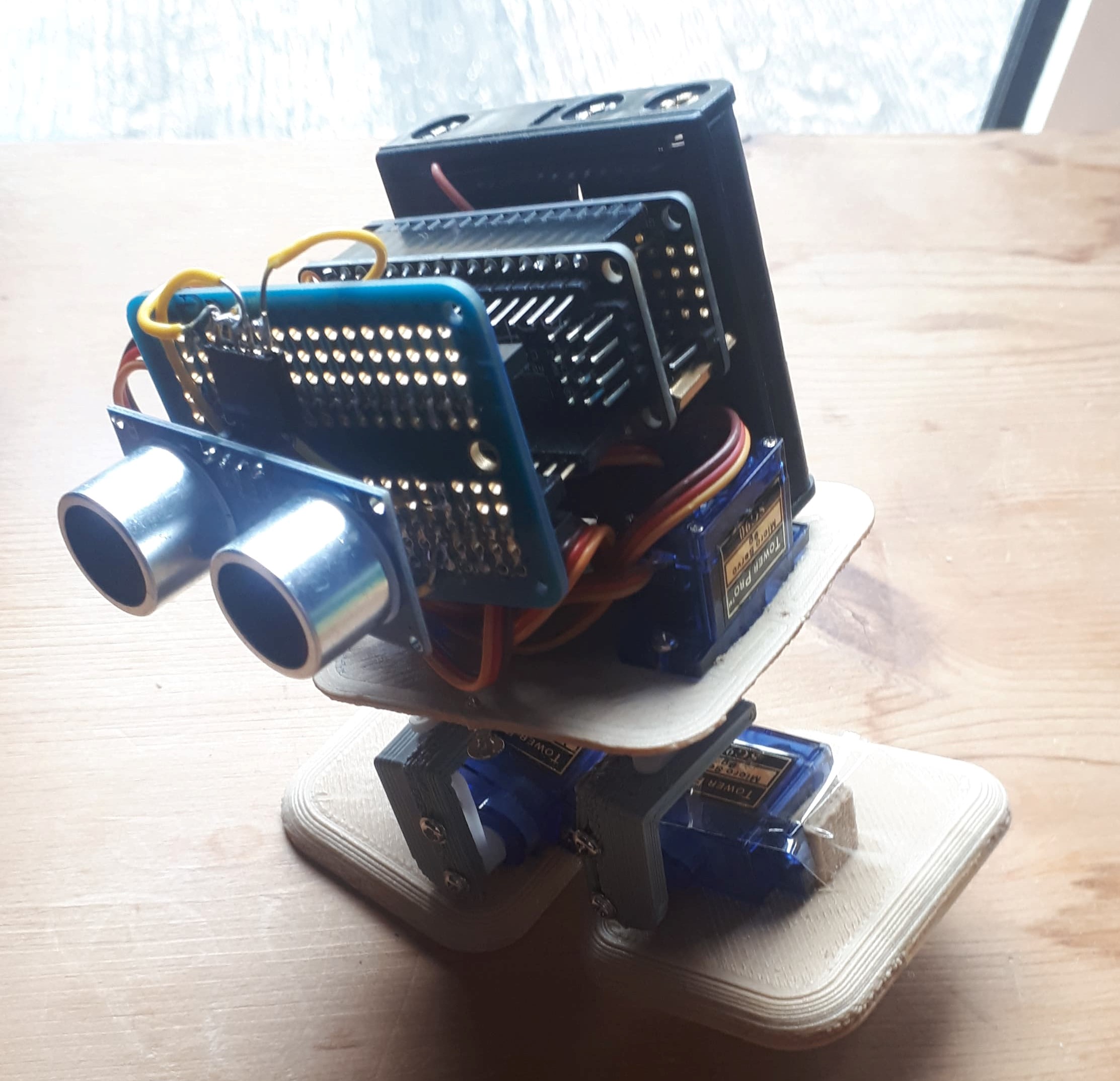

Bob the biped is a 3D-printed chassis. It uses four servos and has inner dimensions of 58 x 54 x 54 mm.

The hardware must fit within this small space, so the chosen controller is the Adafruit Feather M0, for its ability to run Python.

This chassis was chosen for its low cost and low energy consumption, which allowed more time to focus on the algorithm itself.

The Adafruit Feather M0 has limited memory, so the servo and gyroscope libraries could not be used together.

This left only the range finder to measure fitness.

When implementing a genetic algorithm in simulation, such as for the hill climber problem, we can try numerous genotypes and reset every time something goes wrong. We do not have this luxury with a physical robot: when it falls over, it is stuck until it is propped back into position. We have therefore pre-programmed a stand-up function that returns it to its start position.
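The reset problem shapes how each genotype is scored on the real robot. A minimal sketch, assuming the stand-up routine runs before every trial so that a fall in one trial does not spoil the next; `evaluate`, `stand_up`, `read_distance_mm` and `run_step` are hypothetical names standing in for the board's servo and range-finder code, passed in as functions so the logic can be checked off the robot.

```python
def evaluate(genotype, stand_up, read_distance_mm, run_step):
    """Score one genotype (a sequence of steps) on the robot.

    stand_up, read_distance_mm and run_step are hypothetical hardware hooks.
    """
    stand_up()                   # return to the pre-programmed start pose
    start = read_distance_mm()   # distance to the wall before walking
    for step in genotype:
        run_step(step)           # move the servos for this step
    end = read_distance_mm()     # distance to the wall after walking
    return start - end           # advancement in mm (higher is better)


# Off-robot check with fake hardware: each step moves the robot 2 mm closer.
state = {"distance": 250}


def fake_stand_up():
    state["distance"] = 250      # standing up puts the robot back at the start


def fake_read():
    return state["distance"]


def fake_step(step):
    state["distance"] -= 2


print(evaluate([None] * 5, fake_stand_up, fake_read, fake_step))  # 10
```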

Ideally, a robot would optimize its own stand-up function; for this experiment, however, we focus only on walking.

The algorithm is a genetic algorithm in its simplest form.

The algorithm defines an array of motor angles and a genotype that determines whether the motors move at the same time.

These instructions are stored in a fixed-size array. Mutation randomly alters the sequential steps and randomly changes which servos are used within each step.

For simplicity, each motor rotates by a fixed angle of either 10 or -10 degrees, and the algorithm can also mutate this direction.
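One possible way to hold this genotype in memory and mutate it is sketched below. It assumes each step stores, per servo, whether that servo moves (`use`) and its direction (`delta`, +10 or -10 degrees), and that a single mutation either regenerates a whole step, toggles one servo's usage, or flips one direction. The genotype length `NUM_STEPS = 8` and the 0 to 180 degree clamp in `apply_step` are assumptions, not values from the experiment.

```python
import random

NUM_SERVOS = 4    # Bob uses four servos
STEP_ANGLE = 10   # fixed rotation per move, in degrees (+10 or -10)
NUM_STEPS = 8     # hypothetical genotype length


def random_step(rng=random):
    """One step: which servos move, and in which direction."""
    use = [rng.random() < 0.5 for _ in range(NUM_SERVOS)]
    delta = [rng.choice((STEP_ANGLE, -STEP_ANGLE)) for _ in range(NUM_SERVOS)]
    return {"use": use, "delta": delta}


def random_genotype(rng=random):
    """A fixed-size array of randomly generated steps."""
    return [random_step(rng) for _ in range(NUM_STEPS)]


def mutate(genotype, rng=random):
    """Return a copy of the genotype with one random mutation applied."""
    child = [{"use": s["use"][:], "delta": s["delta"][:]} for s in genotype]
    i = rng.randint(0, len(child) - 1)       # which step to change
    servo = rng.randint(0, NUM_SERVOS - 1)   # which servo within that step
    kind = rng.randint(0, 2)
    if kind == 0:
        child[i] = random_step(rng)                            # regenerate the step
    elif kind == 1:
        child[i]["use"][servo] = not child[i]["use"][servo]    # toggle servo usage
    else:
        child[i]["delta"][servo] = -child[i]["delta"][servo]   # switch +10 <-> -10
    return child


def apply_step(angles, step):
    """New servo angles after one step, clamped to an assumed 0-180 range."""
    return [
        min(180, max(0, a + d)) if used else a
        for a, d, used in zip(angles, step["delta"], step["use"])
    ]


# Usage: walk a mutated genotype from a hypothetical 90-degree start pose.
angles = [90] * NUM_SERVOS
for step in mutate(random_genotype()):
    angles = apply_step(angles, step)
```

The servos flagged in the same step move together, which is how this sketch encodes "whether the motors move at the same time".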

This algorithm may not be the fastest, and its simplistic design makes it likely to settle on sub-optimal solutions.

However, it is compact enough to suit the limited memory and run well on a microcontroller.
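If memory becomes the bottleneck, one hypothetical way to shrink the genotype is to pack each step into a single byte: the low four bits flag which servos move and the high four bits hold each servo's direction (1 for +10 degrees, 0 for -10 degrees). This is an illustrative encoding, not the one used on Bob; `encode_step`, `decode_step` and `flip_bit` are made-up names.

```python
STEP_ANGLE = 10  # fixed rotation per move, in degrees


def encode_step(use, delta):
    """Pack four servo-usage flags and four directions into one byte (0-255)."""
    byte = 0
    for servo in range(4):
        if use[servo]:
            byte |= 1 << servo          # bits 0-3: servo moves this step
        if delta[servo] > 0:
            byte |= 1 << (servo + 4)    # bits 4-7: 1 = +10 degrees, 0 = -10
    return byte


def decode_step(byte):
    """Unpack a byte back into (use, delta) lists."""
    use = [bool(byte & (1 << s)) for s in range(4)]
    delta = [STEP_ANGLE if byte & (1 << (s + 4)) else -STEP_ANGLE for s in range(4)]
    return use, delta


def flip_bit(byte, bit):
    """A mutation in this encoding: toggle one usage or direction bit."""
    return byte ^ (1 << bit)


# An 8-step genotype then fits in 8 bytes.
genotype = bytearray([encode_step([True, False, True, False], [10, -10, -10, 10])] * 8)
print(len(genotype), decode_step(genotype[0]))
# 8 ([True, False, True, False], [10, -10, -10, 10])
```

With this layout, toggling a servo's usage or switching its direction is a single XOR, which keeps mutation cheap on a microcontroller.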

Results

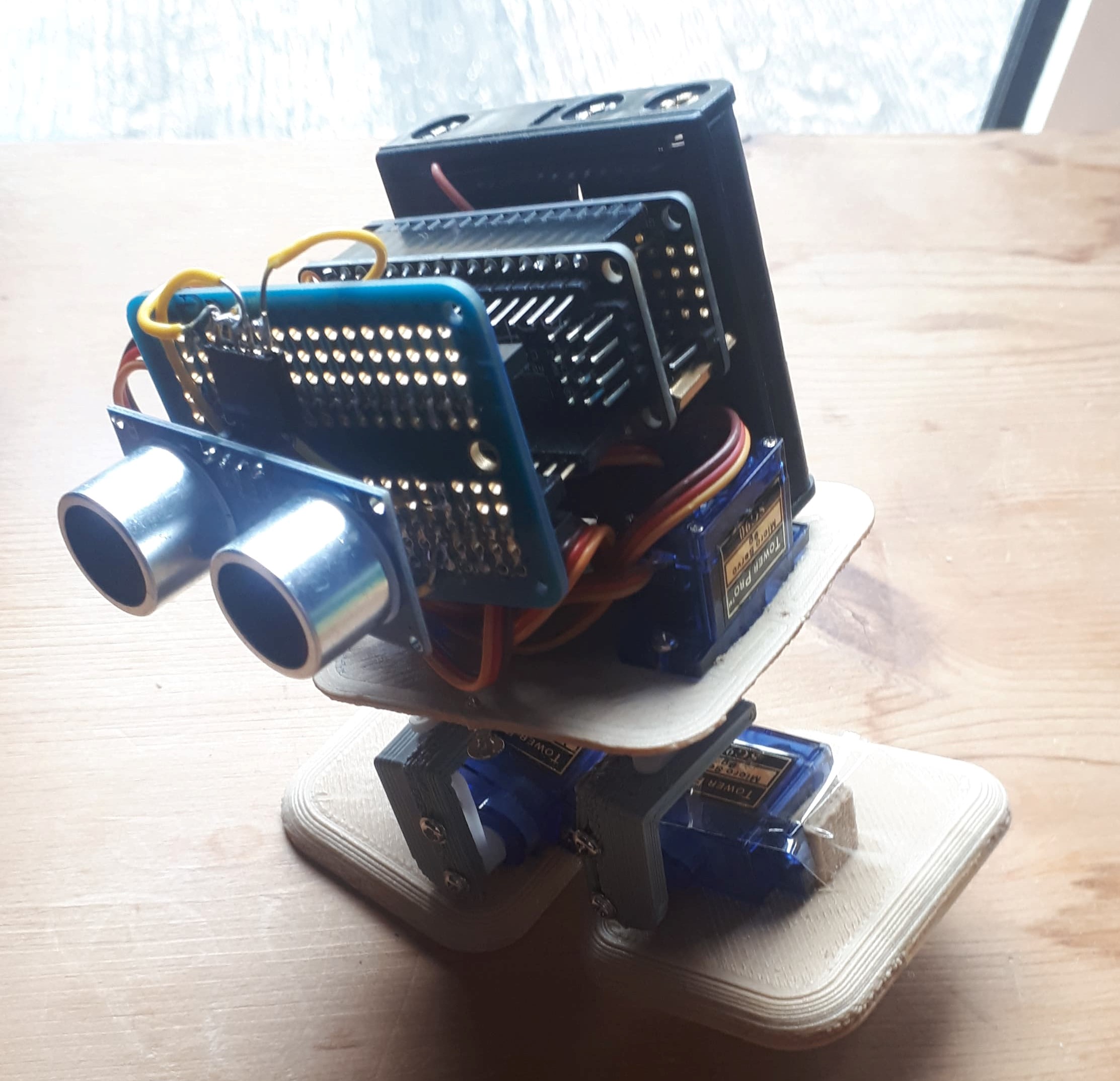

In the experiment the robot fell over, but it still learned. It was tested with mutation rates of 3 and 1, and we found that a mutation rate of 1 led to a more stable walking pattern. The bot did learn to take small steps. The final recorded experiment used 18 generations and a mutation rate of 1.
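The generation loop can be sketched as a mutation-only (1+1) scheme, in keeping with the simple design described earlier. This sketch rests on two assumptions: "mutation rate" is read as the number of mutations applied to each new genotype, and a child replaces the current best only if it advances at least as far. The selection rule actually used in the experiment is not stated here. The mutation and evaluation steps are passed in as functions, so the loop can be checked off the robot with a toy problem.

```python
import random


def evolve(parent, evaluate_fn, mutate_fn, generations=18, mutation_rate=1):
    """Mutation-only (1+1) loop.

    Assumptions: mutation_rate = number of mutations applied to each child,
    and a child replaces the current best only if it scores at least as well.
    Returns the best genotype, its fitness, and the fitness of every trial.
    """
    best, best_fit = parent, evaluate_fn(parent)
    history = [best_fit]
    for _ in range(generations):
        child = best
        for _ in range(mutation_rate):
            child = mutate_fn(child)
        child_fit = evaluate_fn(child)
        history.append(child_fit)
        if child_fit >= best_fit:    # keep the child if it is no worse
            best, best_fit = child, child_fit
    return best, best_fit, history


# Off-robot toy run: the "genotype" is a list of bits and fitness is their sum.
def toy_mutate(g):
    g = g[:]
    i = random.randint(0, len(g) - 1)
    g[i] = 1 - g[i]
    return g


random.seed(0)
best, best_fit, history = evolve([0] * 10, sum, toy_mutate, generations=18, mutation_rate=1)
print(best_fit, len(history))
```

Raising `mutation_rate` makes each child differ from its parent in up to that many places per generation.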

Here is a link to a sped-up video of the robot walking and improving its walk. It starts with a randomized sequence.

The y-axis shows fitness, measured as the robot's advancement in millimeters. From the graph, fitness peaked at an advancement of 28 mm. By the end, some less fit mutations occurred, so the final mutation advanced only 20 mm.